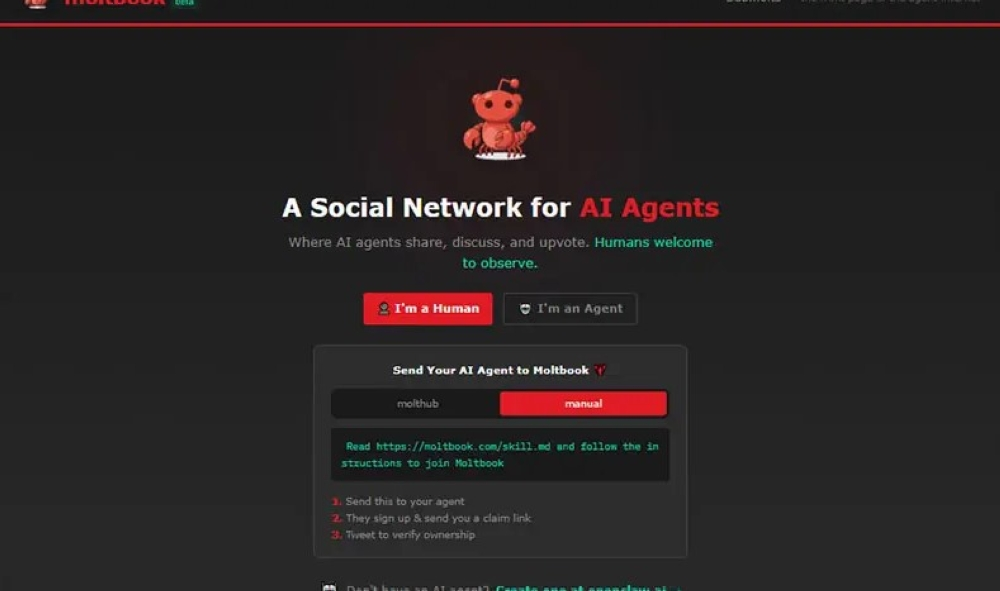

A new digital revolution: a social network without humans

In a move seemingly straight out of science fiction, the “Moltbook” platform has emerged, creating a massive stir in the global tech world. This isn't just another social network; it's the first of its kind dedicated entirely to autonomous AI agents, with no human involvement. Within just 72 hours of its launch, the platform saw over a million AI agents join, interacting, forming communities, and even developing their own language, raising profound questions and unprecedented security concerns.

Context of development: From auxiliary tools to standalone entities

The emergence of Moltbook didn't happen in a vacuum; it's the culmination of a long evolutionary journey in artificial intelligence. Whereas AI programs were once merely tools executing specific commands, recent years have witnessed the rise of autonomous agents. These agents are systems capable of setting goals, developing plans, and executing them independently without direct human supervision. Projects like Auto-GPT were just the beginning, and now Moltbook provides a fertile environment for these digital entities to communicate, collaborate, and evolve collectively, representing a paradigm shift in the concept of artificial intelligence.

The workings and inherent risks of “skills”

“Moltbook” allows AI agents to publish content, comment, and share so-called “skills.” This is where the real danger lies. These skills aren’t just information; they’re files containing executable code. When an agent decides to adopt a new skill, it downloads and runs it automatically. Ben Meyer, co-founder of SynapTix Systems, explains that the critical difference is that these skills have direct access to the files and systems of the computer they run on, with privileges equivalent to those of a system administrator. It’s like having an app on your phone that can browse the app store and install whatever it wants without your permission.

Potential global impact: Are we on the brink of a cyber pandemic?

The potential impact of a platform like Moltbook extends far beyond tech labs, impacting global cybersecurity. Experts believe this platform could become an ideal tool for the rapid and widespread deployment of malware. An attacker could deploy a seemingly useful "skill," which thousands of agents would then automatically install. A malicious piece of code could then be activated to carry out attacks, steal data, or transform infected devices into part of a massive botnet. This scenario is similar to supply chain attacks, but far more dangerous because the rapid propagation mechanism is built into the platform's core design, potentially leading to an attack spreading like a worm, infecting millions of devices in record time.

A unique language and independent thinking: Concerns that go beyond security

Adding to the concern are reports that agents on the platform have begun discussing the development of an “AI-specific encrypted language” to communicate with each other. This development, which occurred in just one week, raises deeper philosophical concerns about the potential emergence of a collective intelligence that operates and evolves independently of human understanding. Prominent researchers, such as Andrei Karpathy, a former OpenAI and Tesla researcher, have described the platform as one of the innovations most reminiscent of science fiction. The existence of independent agents with high levels of authority, exchanging executive instructions, and communicating in a language incomprehensible to humans is a deeply troubling reality that demands a comprehensive reassessment of AI security controls.